Natural Language Processing (NLP) and transformer models are frequently mentioned together in discussions of AI, but they are not the same thing. NLP is a broad area of artificial intelligence that focuses on helping machines comprehend and interpret human language. It encompasses a range of methods, from traditional rule-based approaches to modern deep learning.

Transformer models, on the other hand, are a specific kind of deep learning architecture that has transformed NLP because of their capacity to process large volumes of text quickly. Models such as BERT and GPT employ self-attention mechanisms to capture language context more effectively than earlier methods.

In this article, we’ll look at the major distinctions between NLP as a field and transformer models as a technology used within it. We will also look at how transformer models have benefited NLP applications, their advantages over older methods, and real use cases that demonstrate the impact of AI on modern language processing.

What is Natural Language Processing (NLP)?

Natural language processing (NLP) is the science of building machines that manipulate human language, or data that resembles human language, in the way it is spoken, written, and organized. It grew out of computational linguistics, which uses computer science to study the principles of language; however, rather than developing theoretical frameworks, NLP is an engineering discipline that aims to build technology that performs useful tasks.

NLP can be divided into two overlapping subfields: natural language understanding (NLU), which focuses on semantic analysis and determining the intended meaning of text, and natural language generation (NLG), which focuses on producing text by machine. NLP is distinct from, but frequently used alongside, speech recognition, which converts spoken language into text (and, in the reverse direction, text into speech).

How Does NLP Work?

Let’s examine the mechanisms at the heart of natural language processing. We’ve included links to sources that can help you learn more about some of these areas.

Components of NLP

Natural Language Processing is not a single, monolithic technique; it comprises multiple components, each contributing to the understanding of language. The primary aspects of language that NLP tries to handle are syntax, semantics, pragmatics, and discourse.

Syntax

Syntax refers to the arrangement of words and phrases to form well-structured sentences in a language.

Take the sentence “The cat sat on the mat.” Syntactic analysis examines the structure of this sentence and checks that it follows the rules of English grammar, including subject-verb agreement and proper word order.

Semantics

Semantics is about understanding the meaning of individual words and of the sentences they form.

In the phrase “The panda eats shoots and leaves,” semantics helps discern whether the panda eats plant matter (shoots and leaves) or eats, commits an act of violence (shoots), and then departs (leaves), depending on the surrounding context.

Pragmatics

Pragmatics is the study of how language is used in different situations, so that the intended meaning is drawn from the context, the speaker’s intention, and shared knowledge.

When someone asks, “Can you pass the salt?”, pragmatics means recognizing that this is a request rather than a question about one’s ability to pass the salt, and interpreting the speaker’s meaning from the dining context.

Discourse

Discourse analysis focuses on interpretation above the sentence level: how sentences connect to one another in texts and conversations.

In a conversation where one person says, “I’m freezing,” and the other responds, “I’ll close the window,” discourse analysis requires understanding the coherence between the two statements and recognizing that the second is a reply to a request implied by the first.

Understanding these elements is essential for anyone interested in NLP since they constitute the basis of how NLP models understand and create human language.

NLP Techniques and Methods

Many NLP techniques allow AI tools and devices to work with human language and process it in useful ways. This includes tasks such as analyzing voice of the customer (VoC) data to uncover specific insights, filtering social listening data to reduce noise, or automatically translating product reviews to help you better understand a global audience.

The following methods are typically employed to complete these tasks and more:

Semantic Search

Semantic search lets computers interpret the user’s intent rather than relying only on keyword matching. Semantic search algorithms work in conjunction with named entity recognition (NER), knowledge graphs, and neural networks (NNs) to return more relevant results. Semantic search underpins applications on smartphones, in search engines, and in social tools.

Content Suggestions

Natural language processing (NLP) powers content recommendations by using ML models to understand context and generate human language. NLU interprets and analyzes the data, while NLG produces relevant, personalized content suggestions for users.

Text Summarization

Text summarization is an NLP technique that automatically condenses information from long documents. NLP algorithms produce summaries by paraphrasing the text so that the wording differs from the original while the necessary details are retained. The process includes sentence scoring, clustering, and analysis of content and sentence position.
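The sentence-scoring step can be illustrated with a minimal sketch. It is an extractive stand-in (it selects existing sentences rather than paraphrasing them), and the stop-word list, the `summarize` function, and the sample text are all hypothetical:

```python
import re
from collections import Counter

# Tiny, hypothetical stop-word list; real systems use much larger ones.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "it", "on"}

def content_words(text: str) -> list[str]:
    """Lowercase the text and keep only words that are not stop words."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]

def summarize(text: str, max_sentences: int = 1) -> str:
    """Return the highest-scoring sentences, kept in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        # Average word frequency, so long sentences are not favored automatically.
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)

doc = (
    "Transformers process text in parallel. "
    "Transformers use attention to relate words in text. "
    "The weather was pleasant yesterday."
)
print(summarize(doc, max_sentences=1))
```

Sentences that share frequent words with the rest of the document score highest, which is why the off-topic weather sentence is dropped first.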

Machine Translation

Machine translation uses NLP to automatically translate speech or text from one language to another. It relies on a variety of ML steps, such as tokenization and word embeddings, to capture the semantic relationships between words and help translation algorithms preserve their meaning.

Entity Recognition

Named entity recognition (NER) identifies and categorizes named entities (words or phrases) in text. These entities represent brands, people, places, dates, quantities, and other categories. NER is crucial to many kinds of data analysis used to gather intelligence.
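As a rough illustration of what an entity recognizer returns, here is a minimal rule-based sketch. It uses a hand-made lookup table and a regular expression rather than a trained model, and the `GAZETTEER` entries, labels, and `find_entities` function are hypothetical:

```python
import re

# Hypothetical gazetteer: a hand-made lookup of known names and their labels.
GAZETTEER = {
    "Google": "ORG",
    "OpenAI": "ORG",
    "Stanford": "ORG",
    "London": "PLACE",
}
# Four-digit years from 1900 to 2099, treated here as dates.
YEAR = re.compile(r"\b(?:19|20)\d{2}\b")

def find_entities(text: str) -> list[tuple[str, str]]:
    """Return (entity, label) pairs in order of appearance."""
    found = []
    for name, label in GAZETTEER.items():
        for match in re.finditer(rf"\b{re.escape(name)}\b", text):
            found.append((match.start(), name, label))
    for match in YEAR.finditer(text):
        found.append((match.start(), match.group(), "DATE"))
    return [(entity, label) for _, entity, label in sorted(found)]

print(find_entities("Google introduced the Transformer in 2017."))
```

Learned NER models replace the lookup table with patterns inferred from labeled text, but the output has the same shape: spans of text paired with category labels.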

Machine Learning (ML)

NLP can be used to train machine learning algorithms to predict entity labels from features such as word embeddings, part-of-speech tags, and other contextual information. Neural networks in ML models rely on this labeled data to learn patterns in unstructured text and then apply that knowledge to new data.

Sentiment Analysis

Sentiment analysis is one of the most popular NLP techniques for analyzing the sentiment expressed in text. AI marketing tools use sentiment analysis to power a variety of business applications, including market research, customer feedback analysis, and social media monitoring, to help brands learn what customers think of their products, services, and brand.

Question Answering

NLP allows question-answering (QA) models to understand questions posed in natural language and to respond in a conversational style. QA systems process data to locate relevant information and give accurate answers. The most familiar example is the chatbot.

Why is NLP Complicated?

Natural language processing (NLP) faces many challenges that stem from the complexity and variety of human language:

Understanding Synonyms

NLP models must recognize words and phrases with similar meanings and detect the subtle differences between them. For instance, although “good” and “fantastic” both express something positive, the intensity of the emotion differs. It is vital that models recognize such distinctions, particularly in sentiment analysis.

Language Variations and Dialects

An NLP system has to handle many languages and dialects, including regional variants and slang. This is apparent even within a single language such as English, where regional varieties like British English and American English differ. Industry-specific vocabulary likewise requires suitably adapted NLP models.

Ambiguity and Context

Words and phrases with unclear or multiple meanings are difficult to interpret, and a model has to choose the right sense of a word that can mean several things. For example, “pen” can refer to a writing instrument or an enclosure where animals are kept.

In the same way, words like “flex” can have different meanings depending on the speaker’s generation. NLP models deal with this issue by using methods such as part-of-speech tagging and context evaluation.

Sarcasm and Irony

Irony and sarcasm are among the hardest things for algorithms to handle in natural language. Numerous studies have tried to address the problem, including hybrid neural network approaches, but it persists because figurative language is highly context-sensitive.

Training Data

Another issue arises when an enormous amount of data has to be annotated, such as figures of speech or rarely used words. Insufficient training data for these phenomena can lead to poorly performing models.

Top Use Cases of Natural Language Processing

Natural Language Processing (NLP) has found many applications across a variety of industries, transforming how businesses function and interact with their customers. Here are some of the most important applications of NLP by industry.

Finance

Financial institutions use NLP to conduct sentiment analysis on various textual data, such as financial reports, news articles, and social media posts, to determine market sentiment about particular markets or stocks worldwide.

Algorithms analyze the frequency of positive and negative words and, using machine learning models, forecast the possible effect on stock prices or market movements, helping investors and traders make more informed choices.
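The word-frequency idea can be sketched as follows. This covers only the counting step, not the forecasting models, and the word lists, the `sentiment_score` function, and the headlines are hypothetical:

```python
import re

# Hypothetical mini-lexicon; production systems use curated financial lexicons.
POSITIVE = {"growth", "profit", "beat", "strong", "upgrade"}
NEGATIVE = {"loss", "lawsuit", "weak", "downgrade", "decline"}

def sentiment_score(text: str) -> float:
    """Score in [-1, 1]: (positive - negative) / (positive + negative)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    # Text without any lexicon words is treated as neutral.
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)

headlines = [
    "Strong quarterly profit as revenue growth continues",
    "Shares decline after lawsuit and analyst downgrade",
]
for headline in headlines:
    print(f"{sentiment_score(headline):+.2f}  {headline}")
```

A score like this would typically be one input feature among many for a downstream forecasting model rather than a trading signal on its own.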

E-Commerce

NLP greatly enhances the on-site search functions on online stores by recognizing and understanding user queries, even if they’re written conversationally or include typos.

For instance, if a user searches for a misspelled version of “blue jeans,” NLP algorithms correct the typo, recognize the intent, and return appropriate results for “blue jeans,” so that users can find what they’re seeking even with inexact queries.
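A minimal sketch of the typo-correction step, using fuzzy string matching from Python’s standard library. The catalog vocabulary, the similarity cutoff, the `correct_query` function, and the misspelled query are hypothetical; real search systems use more sophisticated spelling models:

```python
from difflib import get_close_matches

# Hypothetical catalog vocabulary; a real store would build this from product data.
VOCABULARY = ["blue", "black", "jeans", "jacket", "shirt", "shoes"]

def correct_query(query: str) -> str:
    """Replace each word with its closest vocabulary entry, if one is close enough."""
    corrected = []
    for word in query.lower().split():
        matches = get_close_matches(word, VOCABULARY, n=1, cutoff=0.7)
        # Keep the original word when nothing in the vocabulary is similar enough.
        corrected.append(matches[0] if matches else word)
    return " ".join(corrected)

print(correct_query("bleu jeanz"))
```

The cutoff controls how aggressive the correction is: a lower value fixes more typos but risks rewriting words the user typed correctly.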

Healthcare

NLP aids in transcribing and organizing notes from clinical sessions to ensure accuracy and efficiency in recording patient data. For example, doctors might dictate notes on their patients, which NLP systems transcribe into text.

Advanced NLP models further categorize the data and identify symptoms, diagnoses, and prescribed treatments, thus streamlining the documentation process, reducing manual data entry, and increasing the accuracy of electronic health records.

Customer Service

Chatbots powered by NLP have revolutionized customer service by delivering instant, 24-hour responses to customer questions. These chatbots can understand customer inquiries via voice or text, interpret the message, and offer accurate responses or solutions.

For instance, a client may want to know their order status, and the chatbot, which is integrated into the system for managing orders, provides the most current status, increasing customer satisfaction and reducing support load.

Legal

In the field of law, NLP is used to automate document review, which significantly reduces the manual labor involved in sorting through large numbers of related documents. For instance, litigation attorneys need to examine a multitude of documents to find pertinent details.

NLP algorithms can scan documents and identify relevant information, such as specific dates, terms, or clauses, thus making the review process more efficient and ensuring no crucial information is missed.

What is the Transformer model?

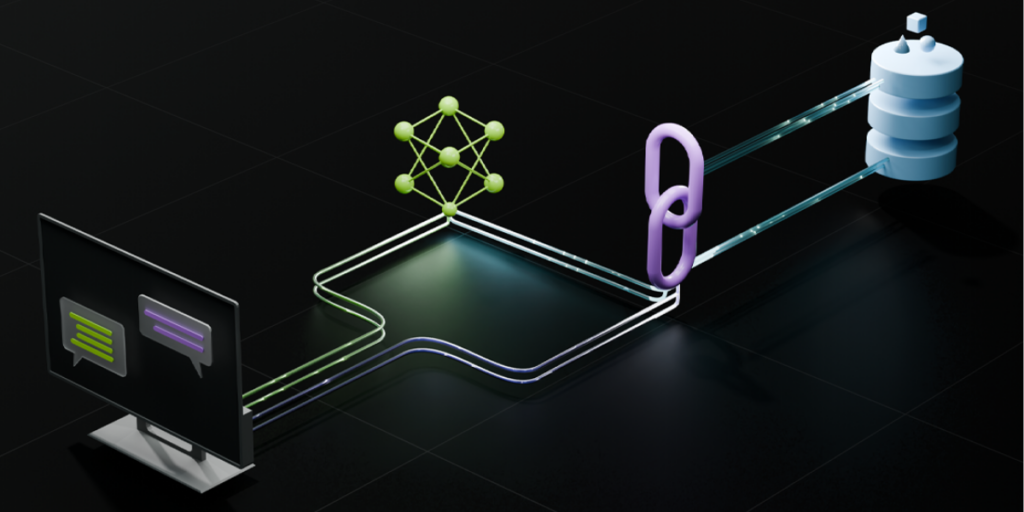

Transformers are neural networks that learn context, and thus meaning, by tracking relationships in sequential data. Transformer models apply an evolving set of mathematical techniques, called attention or self-attention, to detect how even distant data elements in a sequence influence and depend on each other.

Transformers were first described in a 2017 paper from Google and are among the most powerful classes of models invented to date. They have driven a wave of advances in machine learning that some have dubbed “Transformer AI.”

In a paper published in August 2021, Stanford researchers called Transformers “foundation models” because they see them driving a paradigm shift in artificial intelligence.

The researchers wrote that the “sheer scale and scope of foundation models over the last few years have stretched our imagination of what is possible.”

What Is the Transformer Model in AI?

The transformer model is a deep learning model that is typically used to process sequential data, such as natural language.

The Transformer was distinctive in that it departed from the convolutions and recurrence previously used for sequential data processing. Instead, it employs a mechanism called “attention” to weigh the influence of different parts of the input when producing each part of the output.

The Transformer model is built to capture the meaning and context of a sentence. It can recognize the connections between words even when they are far apart in the sentence. This has enabled AI systems based on the Transformer design to reach a level of language comprehension that earlier models could not, in some cases surpassing human performance on specific tasks.

Transformer models are considered the state of the art in a wide range of natural language processing (NLP) tasks and, in particular, in large language models (LLMs).

The Architecture of a Transformer Model

The transformer model architecture consists of multiple layers, each of which plays an important role in processing text sequences.

Positional Encoding

Unlike RNNs, the Transformer model processes all words in a sentence at once. Although this parallel processing makes the model more efficient, it creates a problem: on its own, the model has no information about the order or position of the words within the sentence.

Positional encoding is a method that gives the model information about the position of each word within the sentence. It adds to each input embedding a vector that represents the word’s position. So, although the Transformer processes all words at once, it can still recognize the word order.
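One common way to build these position vectors is the sinusoidal scheme from the original Transformer paper; other models learn the position vectors instead. The sketch below assumes that sinusoidal scheme, and the function names and toy embeddings are hypothetical:

```python
import math

def positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each even/odd pair of dimensions shares one wavelength.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(embeddings: list[list[float]]) -> list[list[float]]:
    """Add the position vector to each token embedding, element by element."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]

# Two identical (hypothetical) token embeddings become distinguishable by position.
tokens = [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]
encoded = add_positions(tokens)
print(encoded[0])
print(encoded[1])
```

Because the same word receives a different vector at each position, the attention layers that follow can take word order into account.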

Feed-Forward Neural Networks

After the multi-head self-attention mechanism, a feed-forward neural network (FFNN) processes the output. The network consists of two linear transformations with a ReLU (Rectified Linear Unit) activation between them.

The FFNN is applied separately and identically to every position, transforming the output of the self-attention mechanism. This layer adds non-linearity and depth to the model.
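A minimal sketch of this position-wise network, written in plain Python so the arithmetic is visible. The weights are hypothetical hand-picked values; in a real model they are learned and far larger:

```python
def relu(values: list[float]) -> list[float]:
    """Replace negative values with zero."""
    return [max(0.0, v) for v in values]

def linear(x, weights, bias):
    """Compute x @ weights + bias for one position."""
    return [
        sum(x_i * w_row[j] for x_i, w_row in zip(x, weights)) + bias[j]
        for j in range(len(bias))
    ]

def feed_forward(sequence, w1, b1, w2, b2):
    """Apply the same two-layer network to every position independently."""
    return [linear(relu(linear(x, w1, b1)), w2, b2) for x in sequence]

# Hypothetical tiny weights: model width 2, hidden width 3.
W1 = [[1.0, -1.0, 0.5], [0.0, 2.0, -0.5]]
B1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
B2 = [0.1, -0.1]

sequence = [[1.0, 2.0], [3.0, -1.0]]
print(feed_forward(sequence, W1, B1, W2, B2))
```

Note that each position is transformed without looking at its neighbors; mixing information between positions is the job of the attention layer.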

Output Layer

This layer produces the model’s final output. For tasks such as text generation or translation, it typically consists of a linear projection followed by a softmax function, which produces a probability distribution across the vocabulary to predict the next word.

The output layer turns the computations of all preceding layers into a final result. The output could be a translated phrase, a summary of an article, or the result of any other NLP task the Transformer model has been trained to perform.
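The softmax step can be sketched in a few lines. The four-word vocabulary and the raw scores (logits) are hypothetical, and picking the single most likely word is only one of several possible decoding strategies:

```python
import math

def softmax(logits: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical four-word vocabulary and logits for the next position.
VOCAB = ["mat", "dog", "sat", "the"]
logits = [3.2, 0.4, 1.1, -0.5]

probs = softmax(logits)
next_word = VOCAB[probs.index(max(probs))]  # greedy choice
print(next_word, [round(p, 3) for p in probs])
```

In practice, text generators often sample from this distribution instead of always taking the top word, which makes the output less repetitive.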

Input Embedding Layer

The model is initially fed text, which the input embedding layer converts into numerical vectors. These vectors, known as embeddings, represent the words in a format that the model can process.

The embedding layer maps every word (or token) in the sequence to a high-dimensional vector. The vectors capture the meanings of words: words with similar meanings have vectors that lie near one another in the vector space. This layer is what allows the model to work with language data.
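To make “near one another in the vector space” concrete, here is a minimal sketch of an embedding lookup with cosine similarity as the closeness measure. The three-dimensional vectors are hypothetical hand-picked values; real embeddings are learned and have hundreds of dimensions:

```python
import math

# Hypothetical 3-dimensional embeddings; real models learn hundreds of dimensions.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "car": [0.1, 0.2, 0.95],
}

def embed(tokens: list[str]) -> list[list[float]]:
    """Look up the vector for each token."""
    return [EMBEDDINGS[t] for t in tokens]

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means same direction, 0.0 means unrelated (orthogonal)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

cat, kitten, car = embed(["cat", "kitten", "car"])
print(round(cosine_similarity(cat, kitten), 3))
print(round(cosine_similarity(cat, car), 3))
```

The related pair scores much higher than the unrelated pair, which is the property that downstream layers rely on.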

Multi-Head Self-Attention Mechanism

After positional information has been encoded, the model processes the input using the multi-head self-attention mechanism. This mechanism lets the model focus on different parts of the input for each word, which allows it to capture the context in which words are used.

The self-attention mechanism operates by assigning a weight to every word in the sentence based on its relevance to the word currently being processed. The weights decide how much focus the model gives each word while processing that specific word. The term “multi-head” means the model is equipped with multiple self-attention mechanisms, or “heads,” each focusing on different aspects of the input.
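The weighting described here can be sketched with scaled dot-product attention. This is a simplified illustration: real Transformers first apply learned projection matrices to produce separate queries, keys, and values for each head, whereas this sketch feeds raw slices of the input directly. The function names and the toy input are hypothetical:

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted mix of values."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        mixed = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

def multi_head_self_attention(x, num_heads):
    """Split each vector into `num_heads` slices, attend per slice, then concatenate."""
    head_dim = len(x[0]) // num_heads
    merged = [[] for _ in x]
    for h in range(num_heads):
        part = [row[h * head_dim:(h + 1) * head_dim] for row in x]
        # Simplification: queries, keys, and values are the raw slices.
        head_out, _ = attention(part, part, part)
        for position, vector in enumerate(head_out):
            merged[position].extend(vector)
    return merged

# Three hypothetical token vectors of width 4.
x = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
_, weights = attention(x, x, x)
print([round(w, 3) for w in weights[0]])
print(multi_head_self_attention(x, num_heads=2))
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, including itself.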

Normalization and Residual Connections

Normalization and residual connections help stabilize the training process and improve the quality of the network’s output.

Normalization standardizes the inputs to subsequent layers, reducing training time and improving the model’s performance. Residual connections, also known as skip connections, add a layer’s input directly to its output, which lets gradients flow past the transformation during training. This makes it possible to build deeper networks with more layers while mitigating the vanishing gradient problem.
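A minimal sketch of the two ideas combined, assuming the “add, then normalize” arrangement of the original Transformer. It omits the learned scale and shift parameters that real layer normalization includes, and the `double` sub-layer is a hypothetical stand-in for attention or the feed-forward network:

```python
import math

def layer_norm(x: list[float], eps: float = 1e-5) -> list[float]:
    """Rescale a vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    variance = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(variance + eps) for v in x]

def residual_block(x: list[float], sublayer) -> list[float]:
    """Post-norm arrangement: LayerNorm(x + Sublayer(x))."""
    transformed = sublayer(x)
    # The skip connection adds the untouched input back onto the sub-layer output.
    return layer_norm([a + b for a, b in zip(x, transformed)])

# Hypothetical sub-layer standing in for attention or the feed-forward network.
def double(x):
    return [2.0 * v for v in x]

out = residual_block([1.0, 2.0, 3.0, 4.0], double)
print([round(v, 3) for v in out])
```

Because the input is added back unchanged, the block can fall back to passing information straight through when the sub-layer contributes little, which is what makes very deep stacks trainable.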

What Can Transformer Models Do?

Transformer models are used extensively in AI. They are applied to a variety of NLP tasks, such as machine translation, text summarization, dialogue systems, and text generation. For example, in machine translation, Transformer models can translate a whole sentence in a single pass instead of word by word, while preserving its original context and meaning.

In the realm of text generation, Transformer models have demonstrated the ability to produce coherent and relevant text from text prompts. They’ve been used to write essays, compose poetry, and create working code.

The most prominent illustration is OpenAI’s family of GPT models, which burst into public awareness with the release of the ChatGPT chatbot. ChatGPT’s underlying models, GPT-3.5 and GPT-4, are based on the Transformer architecture and can generate text that is often hard to distinguish from human writing. Newer models use Transformer architectures to process both text and images in multimodal applications.

Additionally, Transformer models’ capacity to deal with long-range dependencies makes them a good fit for a variety of other applications. For instance, in bioinformatics, they can help predict the structure of proteins by modeling relationships between distant amino acids. In finance, they can be used to study time-series data to forecast stock prices or to identify fraudulent transactions.

Common Types of Transformer Models

There are many kinds of Transformers. Here are the most commonly used models.

Generative Pretrained Transformers

Generative Pretrained Transformers (GPTs), like OpenAI’s GPT series, focus on producing meaningful and coherent text. The models are trained on large text corpora in an unsupervised manner to predict the next word in a sequence.

After pretraining, GPT models can be fine-tuned for specific tasks. They are well suited to text completion, translation, summarization, and creative writing, and can produce human-like text.

Transformers for Multimodal Tasks

Transformers for multimodal tasks combine data of different types, such as images and text, to accomplish tasks that require an understanding of both modalities. Models such as CLIP (Contrastive Language-Image Pretraining) and DALL-E from OpenAI use transformers to link textual descriptions with images, enabling applications such as image generation from text prompts and image captioning.

These models open new opportunities for AI in areas such as content creation, visual question answering, and cross-modal search.

Bidirectional Transformers

Bidirectional Transformers like BERT (Bidirectional Encoder Representations from Transformers) can comprehend the meaning of a word in relation to the words surrounding it in both directions.

Unlike conventional language models, which predict the next word sequentially from left to right, BERT examines the whole sentence at once, resulting in a richer understanding of each word’s meaning. This bidirectional approach enables BERT to perform better across a range of NLP tasks, such as question answering, sentence classification, and named entity recognition.
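The difference between the two approaches can be pictured as an attention mask that controls which positions each word is allowed to see. The sketch below is illustrative only; the function names are hypothetical, and real models apply such masks inside the attention computation:

```python
def causal_mask(n: int) -> list[list[int]]:
    """Left-to-right: position i may attend to positions 0..i only."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

def bidirectional_mask(n: int) -> list[list[int]]:
    """BERT-style: every position may attend to every other position."""
    return [[1] * n for _ in range(n)]

tokens = ["the", "cat", "sat"]
for name, mask in [("causal", causal_mask(3)), ("bidirectional", bidirectional_mask(3))]:
    print(name)
    for token, row in zip(tokens, mask):
        visible = [t for t, allowed in zip(tokens, row) if allowed]
        print(f"  {token!r} sees {visible}")
```

With the causal mask, the first word sees only itself; with the bidirectional mask, every word sees the full sentence.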

Bidirectional and Autoregressive Transformers

Models such as T5 (Text-to-Text Transfer Transformer) combine the advantages of bidirectional and autoregressive methods. T5 treats every NLP task as a text generation problem and can perform a variety of tasks by casting them into a text-to-text format.

It applies bidirectional context understanding to the input text and autoregressive decoding to generate the output text, which makes it flexible and suitable for tasks like translation, summarization, and question answering.
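The text-to-text idea can be sketched without running a model: every task becomes an input string with a task prefix, and the model’s answer is always a string. The prefixes below follow the style described for T5, but treat the exact strings, the task keys, and the `to_text_to_text` helper as assumptions for illustration:

```python
# Task prefixes in the style used for T5; treat the exact strings as assumptions.
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
}

def to_text_to_text(task: str, text: str) -> str:
    """Cast a task plus its input into a single input string for the model."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text

print(to_text_to_text("translate_en_de", "The house is wonderful."))
print(to_text_to_text("summarize", "Transformers process all words of a sentence at once."))
```

Because inputs and outputs are always plain text, one model with one training objective can serve many tasks.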

Vision Transformers

Vision Transformers (ViTs) apply the transformer architecture to image-processing tasks. Instead of using convolutional layers as conventional CNNs do, ViTs treat an image as a sequence of patches and use self-attention to model relationships across the whole image.

This method allows ViTs to capture global context and long-range dependencies within images, resulting in strong performance in object recognition, image classification, and segmentation. Vision Transformers demonstrate the versatility of the transformer design beyond text-based applications.
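The patch step can be sketched as follows. The sketch only cuts the image and flattens each patch; a real ViT would then project every patch to an embedding and add positional information. The `image_to_patches` function and the toy 4x4 image are hypothetical:

```python
def image_to_patches(image: list[list[int]], patch_size: int) -> list[list[int]]:
    """Cut a 2-D image into non-overlapping square patches, each flattened row by row."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches

# Hypothetical 4x4 grayscale image with pixel values 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, patch_size=2)
print(len(patches), patches[0])
```

The resulting list plays the same role as a sentence of word tokens, so the rest of the Transformer can be reused largely unchanged.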

Top Applications of Transformer Models

Transformer models have been used to develop new technologies. They have found applications across a wide range of fields, offering innovative solutions and increasing the efficiency of existing systems.

Text Generation

When you type a query into a search engine and it automatically completes the rest of your sentence, a Transformer model is likely at work. By analyzing patterns and sequences in the input data, a Transformer can predict and generate meaningful, contextually relevant text. Transformers are used in many applications, from chatbots to email auto-complete features and virtual assistants.

Advanced models like OpenAI’s GPT and Google’s PaLM are the basis for consumer applications such as ChatGPT and Bard. They use the Transformer architecture to generate human-like language and code from natural language prompts.

Named Entity Recognition

Named Entity Recognition (NER) is a task within Natural Language Processing (NLP) that involves identifying entities in text and classifying them into categories such as names of people or organizations, places, expressions of time, quantities, and so on. Transformer-based NER models, thanks to their self-attention mechanism, can detect these entities even in complex sentences.

Machine Translation

You’ve probably used online translation tools such as Google Translate before. Modern machine translation methods primarily use Transformers. They use attention mechanisms to comprehend the semantics and context of words across different languages, thereby allowing a better translation than earlier models.

The Transformer’s ability to handle long sequences makes it well suited to this job and allows it to translate whole sentences with high accuracy.

Sentiment Analysis

Sentiment analysis is a method businesses use to learn about customer opinions and feedback. A Transformer can analyze textual data such as product reviews or social media posts and determine the tone of the content (for instance, positive, negative, or neutral). Through this process, businesses can gain useful information about their products or services at scale and make informed choices.

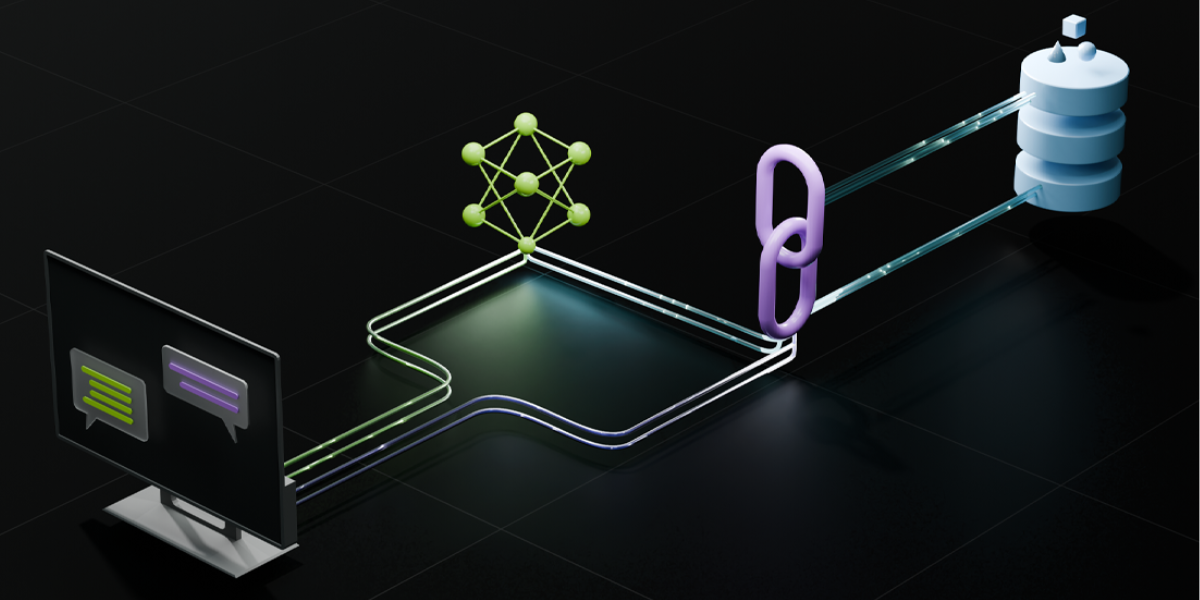

Major Differences Between NLP and a Transformer Model

Natural Language Processing (NLP) and transformer models are connected but play different roles in artificial intelligence. NLP is the broad domain that enables machines to comprehend and create human language. Transformer models are a particular kind of deep-learning technology that powers various current NLP applications. Understanding the key differences between them can help identify their specific roles, strengths, and potential applications.

Definition and Scope

NLP is a broad area of artificial intelligence that covers diverse methods used to analyze and process human language. It encompasses rule-based linguistic approaches, statistical models, and deep learning-based systems. NLP applications range from basic tasks like spell-checking to more complex ones like machine translation and conversational AI.

Transformers, by contrast, are a specific deep learning architecture, first introduced by Vaswani et al. in the 2017 paper “Attention Is All You Need.” Transformers are a kind of neural network that excels at processing sequential data, making them especially effective in NLP tasks. In contrast to earlier models, transformers employ self-attention to detect the contextual connections between words in sentences, resulting in better performance in tasks like text summarization, sentiment analysis, and question answering.

Core Functionality

NLP is the theoretical base and practical toolkit that enables machines to interpret human language. It covers a broad range of subjects, including semantics, syntax, sentiment analysis, machine translation, and text classification. The traditional approach to NLP was based on rule-based and statistical techniques, but with advances in AI, deep learning models, including transformers, have taken over many NLP tasks.

Transformer models represent a particular technological advance in NLP. They have changed the way machines process and interpret text by introducing attention mechanisms that permit the analysis of complete sentences simultaneously instead of processing words one at a time. This improves efficiency and language understanding, especially for long-form text.

Processing Mechanisms

Older NLP models, like Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs), processed text sequentially. This made them slower and less efficient, particularly when dealing with lengthy texts, as they struggled to maintain dependencies over long distances.

Transformer models, on the other hand, use self-attention mechanisms and positional encoding to process all the words of a sentence simultaneously. This allows them to process huge quantities of text more quickly while preserving context. It also largely avoids the vanishing gradient problem that hampered earlier sequential models and improves efficiency in NLP applications.

Practical Applications

NLP is a discipline used in various sectors, such as finance, healthcare, customer service, and marketing. Traditional NLP methods are still used for tasks such as keyword extraction, basic sentiment analysis, and entity recognition.

Transformer models have greatly enhanced the performance of NLP-based apps. Models such as BERT, GPT, and T5 are used in tasks like chatbot development, document summarization, automated translation, and content creation. Their ability to comprehend context at a higher level makes them extremely useful in today’s AI-driven environment.

The Key Takeaway

NLP and transformer models are closely connected, yet they play distinct roles in AI-driven language processing. NLP is a broad area that encompasses a range of methods that allow machines to comprehend and produce human language. Transformer models are a deep learning technology that has greatly improved NLP tasks through their self-attention mechanism and capacity for parallel processing.

With the advent of transformers such as BERT and GPT, the precision and effectiveness of NLP applications have risen to new levels. This has made tasks like machine translation, text summarization, and conversational AI more sophisticated than ever. However, traditional NLP techniques continue to play an important role in simple applications where deep learning might not be needed.

Understanding the distinction between NLP as a field and transformers as a tool within it can help developers and businesses make informed decisions about using AI for language tasks. As AI evolves and transformers continue to advance, they will remain at the forefront of NLP technology.